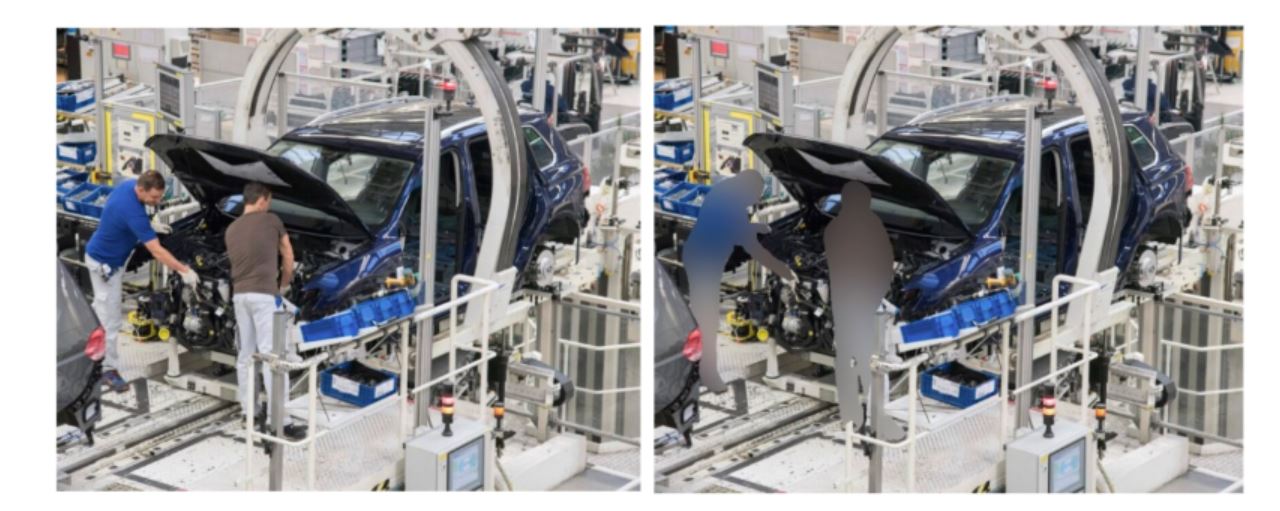

You-are-there experiences are what immersive media promise to deliver with real-time mixed reality (MR). This new format uses volumetric video data to create 3D images as an event is occurring. Further, multiple people can view the image from different angles on a range of devices.

Capturing Reality in 3D Is Hard

Media companies have been early adopters of technology formats such as 360° video, virtual reality (VR), augmented reality (AR), and MR. Regular, as opposed to real-time, MR blends physical and digital objects in 3D and is usually produced in dedicated spaces with green screens and hundreds of precisely calibrated cameras. Processing the massive amounts of volumetric data captured in each scene requires hours or even days of postproduction time. Trying to do MR in real time has proven even more technically and economically challenging for content developers and, so far, has made the format impractical. “Capturing and synchronizing high-resolution, high-frame-rate video in a controlled space is challenging enough,” said John Ilett, CEO and founder of Emergent Vision Technologies, a manufacturer of high-speed imaging cameras. “Doing these things in real-time in live venues is even harder.”

Deep Learning Needs an Assist

One startup thought it had a strategy for overcoming those issues. Condense Reality, a volumetric video company, had a plan for capturing objects, reconstructing scenes, and streaming MR content at multiple resolutions to end-user devices. From start to finish, each frame in a live stream would require only milliseconds to complete. “Our software calculates the size and shape of objects in the scene,” said Condense Reality CEO Nick Fellingham. “If there are any objects the cameras cannot see, the software uses deep learning to fill in the blanks and throw out what isn’t needed, and then stream 3D motion images to phones, tablets, computers, game consoles, headsets, and smart TVs and glasses.” But there was a hitch. For the software to work in real-world applications, Fellingham needed a high-resolution, high-frame-rate camera that content creators could set up easily in a sports stadium, concert venue, or remote location. The company tested cameras, but the models it tried severely limited both data throughput and the cable distance between the cameras and the system’s data processing unit. To move forward, Condense Reality needed a broadcast-quality camera that could handle volumetric data at high speeds.

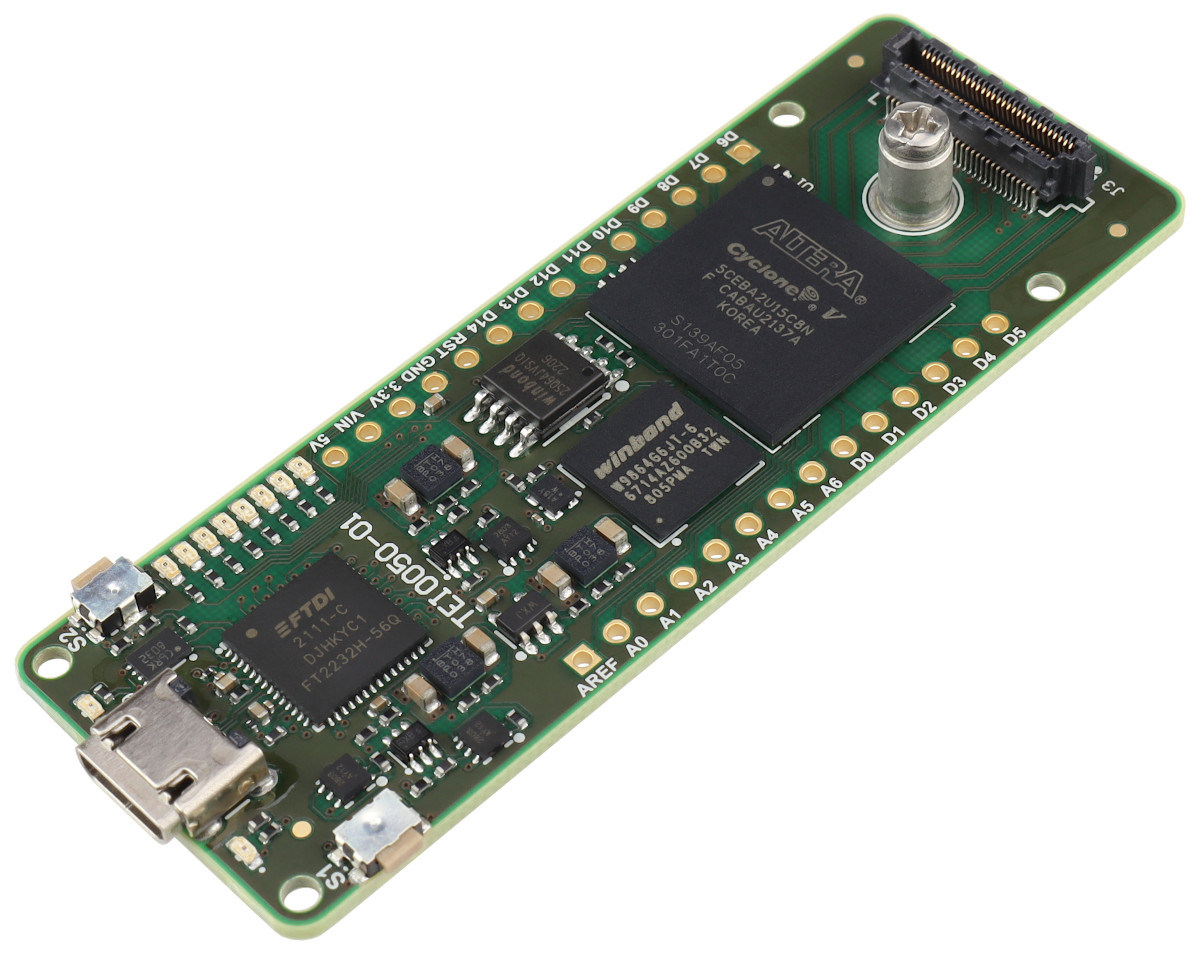

High-Speed Cameras Deliver

In 2020, Fellingham learned that Emergent Vision Technologies was releasing several new cameras with high-resolution image sensors. These cameras included models with SFP28-25GigE, QSFP28-50GigE and QSFP-100GigE interface options, all of which offer cabling options to cover any length. “Our cameras deliver quality images at high speeds and high data rates,” said Ilett. “They capitalize on advances in sensor technology and incorporate firmware we developed so the cameras can achieve the sensor’s full frame rate.” The images in an MR experience should be captured at an extremely high frame rate and resolution. With the new cameras, Fellingham was able to assemble a commercially viable system. “High-speed GigE cameras are what we need to get the data off the cameras quickly and stream it,” he noted. High-speed capture is particularly important for sports use cases, where the most exciting action occurs in the literal blink of an eye. When capturing a golf swing, for example, a frame rate of 30fps is likely to “see” only the beginning and end of the swing, which significantly reduces the quality of the volumetric content. “We are not using these cameras for inspecting parts in a factory, we are using them to create entertainment experiences,” said Fellingham. “When the speed [fps] increases, the quality increases for fast-moving action, the output we generate is better, and the experience improves overall.”
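The golf-swing point can be made concrete with a little arithmetic. The sketch below computes how far a fast-moving object travels between two consecutive frames. The clubhead speed of 45 m/s is an illustrative assumption, not a figure from Condense Reality or Emergent Vision Technologies, and `travel_per_frame_m` is a hypothetical helper name.

```python
def travel_per_frame_m(speed_m_per_s: float, fps: float) -> float:
    """Distance an object moves between two consecutive frames, in metres."""
    return speed_m_per_s / fps


# Assumed clubhead speed near impact (illustrative value only).
CLUBHEAD_SPEED_M_PER_S = 45.0

for fps in (30, 120, 542):
    gap = travel_per_frame_m(CLUBHEAD_SPEED_M_PER_S, fps)
    print(f"{fps:>3} fps: clubhead moves about {gap:.2f} m between frames")
```

Under that assumption, the clubhead moves roughly a metre and a half between frames at 30fps, but well under ten centimetres at 542fps, which is why higher frame rates give the reconstruction far more of the motion to work with.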

4K Cameras with 542fps at 21MP

Condense Reality serves as a system integrator for customer projects. A standard system uses 32 cameras, one high-speed network switch from Mellanox, and a single graphics processing unit (GPU) from Nvidia to cover a 7x7m capture area. The company worked with Emergent Vision Technologies on putting together the optimal system for volumetric capture. “We don’t necessarily want to be committed to very specific hardware configurations, but by working with the Emergent team and testing different components, we’ve found that Nvidia and Mellanox work best for us,” said Fellingham. Along with implementing its technology, the company is working to increase the capture area for MR while maintaining throughput and quality. “When you start to get bigger than a 10x10m area, 4K cameras don’t cut it,” Fellingham said. “When our algorithms improve, we will go bigger.” The new Emergent Vision Technologies cameras are integral to this work. With models supporting up to 542fps at 5120×4096 resolution and interface options ranging up to 100GigE, Fellingham has not had to worry about caps on camera resolution, data rates, or frame rates. Those advantages mean Condense Reality is well positioned to deliver even better content and user experiences.
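To see why a 100GigE interface matters at these specifications, it helps to work out the raw data rate of a single camera. The sketch below does that for 5120×4096 at 542fps. The 8 bits per pixel is an assumption (the article does not state the pixel format), protocol overhead is ignored, and `raw_data_rate_gbps` is a hypothetical helper name.

```python
def raw_data_rate_gbps(width: int, height: int, fps: float,
                       bits_per_pixel: int = 8) -> float:
    """Uncompressed sensor data rate in gigabits per second (1 Gb = 1e9 bits).

    bits_per_pixel defaults to 8, which is an assumption, not a published spec.
    """
    return width * height * fps * bits_per_pixel / 1e9


WIDTH, HEIGHT, FPS = 5120, 4096, 542  # figures quoted in the article

megapixels = WIDTH * HEIGHT / 1e6
rate_gbps = raw_data_rate_gbps(WIDTH, HEIGHT, FPS)

print(f"Resolution: {megapixels:.1f} MP")
print(f"Raw data rate at {FPS} fps: {rate_gbps:.1f} Gb/s")
```

At 8 bits per pixel, the result is about 91 Gb/s from roughly 21 megapixels per frame, which fits within a 100 Gb/s link but would far exceed a 25 or 50 Gb/s one. A higher bit depth would raise the figure proportionally.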

Software: The Secret Sauce

Condense Reality’s software is a completely proprietary offering that creates a 3D mesh composed of hundreds of thousands of polygons. The nodes of the mesh represent the surface of the object being captured. The software then “paints” that mesh with data acquired by the cameras and uses deep learning to estimate the remaining parts of the object that the cameras did not cover. Compression algorithms then reduce that mesh to as small a size as possible for every frame, said Fellingham. “The software takes all of this data and turns it into a 3D model at an extremely quick pace, and it can only do this because of well-optimized algorithms, neural networks, and the Nvidia GPUs,” he said. “While most neural networks are based in TensorFlow, some of the ones we use in the system need to run very fast, so they’re written directly for the GPU.” He added, “Our neural networks solve very specific problems which helps when optimizing them for speed. We don’t deploy a huge black box that performs a ton of inference, as this would be very hard to optimize.” To finish the process, data is sent to Condense Reality’s cloud-based distribution platform, which encodes it at a variable bit rate so that the stream differs depending on the user device. Playback happens inside a game engine, which allows customers to build custom experiences, whether VR or AR, around the volumetric video. Because Condense Reality supports game engines, its content can also be streamed into existing game worlds owned by other companies. Currently, the system supports the Unity and Unreal game engines, but the company plans to build plug-ins for any new game engines that emerge in the future. “These engines can barely be called game engines anymore, as they’re really interactive, 3D tools,” said Fellingham. “We route the real-world content into these tools to provide customers with photorealistic 3D, interactive experiences.”
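The idea of serving a different stream to each device can be illustrated with a simple selection rule. This is a minimal sketch of one common approach (pick the highest-bit-rate version the device's connection can sustain), not a description of Condense Reality's proprietary platform. The rendition names, the bit rates, and the `pick_rendition` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_mbps: float


# Hypothetical set of encoded versions of the same volumetric stream.
LADDER = [
    Rendition("low", 5.0),
    Rendition("medium", 15.0),
    Rendition("high", 40.0),
]


def pick_rendition(available_mbps: float, ladder=LADDER) -> Rendition:
    """Return the highest-bit-rate rendition that fits the available bandwidth.

    Falls back to the lowest-bit-rate rendition if none fits.
    """
    fitting = [r for r in ladder if r.bitrate_mbps <= available_mbps]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_mbps)
    return min(ladder, key=lambda r: r.bitrate_mbps)


for device, bandwidth in [("phone", 8.0), ("headset", 20.0), ("smart TV", 60.0)]:
    print(f"{device}: {pick_rendition(bandwidth).name}")
```

A production system would also weigh the device's rendering capability and react to changing network conditions; the sketch covers only the bandwidth check.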