4K cameras with 542fps at 21MP

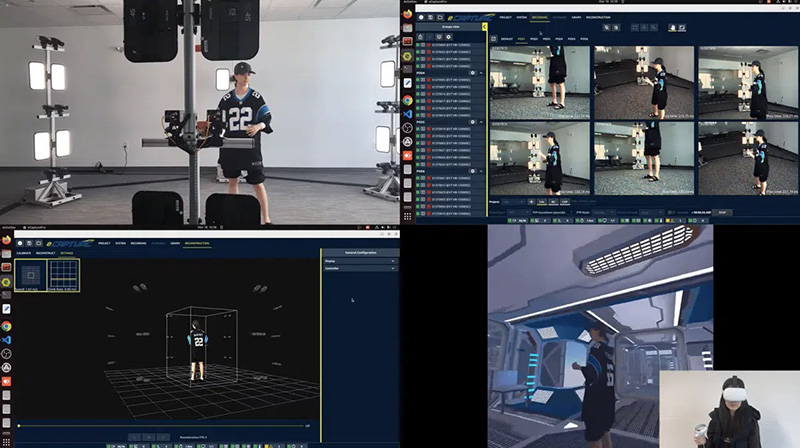

Condense Reality serves as a system integrator for customer projects. A standard system uses 32 cameras, one high-speed network switch from Mellanox, and a single Nvidia graphics processing unit (GPU) to cover a 7×7 m capture area. The company worked with Emergent Vision Technologies to put together the optimal system for volumetric capture. “We don’t necessarily want to be committed to very specific hardware configurations, but by working with the Emergent team and testing different components, we’ve found that Nvidia and Mellanox work best for us.”

Besides implementing its technology, the company is working to increase the capture area for mixed reality (MR) while maintaining throughput and quality. “When you start to get bigger than a 10x10m area, 4K cameras don’t cut it,” Fellingham said. “When our algorithms improve, we will go bigger.”

The new Emergent Vision Technologies cameras are integral to this work. With models supporting up to 542fps at 5120×4096 resolution (about 21 MP) and interface options up to 100GigE, Fellingham has not had to worry about limits on camera resolution, data rates, or frame rates. Those advantages leave Condense Reality well positioned to deliver even better content and user experiences.
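A quick back-of-the-envelope calculation shows why a 100GigE interface matters at these settings. The sketch below assumes 8 bits per pixel (for example, raw Bayer output) and ignores packet and protocol overhead; neither assumption comes from the article, and the function names are illustrative only. Under those assumptions, a single camera running at 5120×4096 and 542fps produces roughly 90.9 Gbit/s of raw pixel data, just under the nominal rate of one 100GigE link.

```python
# Back-of-the-envelope data rate for one camera.
# Assumptions (not from the article): a fixed number of bits per pixel,
# no compression, and no packet/protocol overhead.


def megapixels(width: int, height: int) -> float:
    """Sensor resolution in megapixels (10^6 pixels)."""
    return width * height / 1e6


def raw_data_rate_gbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed pixel data rate in gigabits per second (10^9 bit/s)."""
    return width * height * fps * bits_per_pixel / 1e9


if __name__ == "__main__":
    WIDTH, HEIGHT, FPS = 5120, 4096, 542
    print(f"{megapixels(WIDTH, HEIGHT):.1f} MP")  # 21.0 MP
    for bpp in (8, 10):
        rate = raw_data_rate_gbps(WIDTH, HEIGHT, FPS, bpp)
        print(f"{bpp}-bit: {rate:.1f} Gbit/s")  # 8-bit: 90.9, 10-bit: 113.7
```

At 10 bits per pixel the same arithmetic gives about 113.7 Gbit/s, which is above 100 Gbit/s, so the pixel format matters as much as resolution and frame rate when sizing the network for a camera array.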

Software: The Secret Sauce

Condense Reality’s software is a completely proprietary offering that creates a 3D mesh made up of hundreds of thousands of polygons. The nodes of this mesh represent the surface of the object being captured. The software then “paints” the mesh with data acquired by the cameras and uses deep learning to estimate the parts of the object that the cameras did not cover. Compression algorithms then reduce the mesh for every frame to as small a size as possible, said Fellingham.

“The software takes all of this data and turns it into a 3D model at an extremely quick pace, and it can only do this because of well-optimized algorithms, neural networks, and the Nvidia GPUs,” he said. “While most neural networks are based in TensorFlow, some of the ones we use in the system need to run very fast, so they’re written directly for the GPU.” He added, “Our neural networks solve very specific problems, which helps when optimizing them for speed. We don’t deploy a huge black box that performs a ton of inference, as this would be very hard to optimize.”

To finish the process, the data is sent to Condense Reality’s cloud-based distribution platform, which delivers it at a variable bit rate so that the stream differs depending on the user’s device. Playback happens inside a game engine, which allows customers to build custom experiences, whether VR or AR, around the volumetric video. Because Condense Reality supports game engines, its content can also be streamed into existing game worlds owned by other companies. The system currently supports the Unity and Unreal game engines, and the company plans to build plug-ins for any new game engines that emerge in the future.

“These engines can barely be called game engines anymore, as they’re really interactive 3D tools,” said Fellingham. “We route the real-world content into these tools to provide customers with photorealistic 3D, interactive experiences.”
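Condense Reality’s pipeline is proprietary, and the article does not say how the “painting” step works. As a generic illustration of the idea only, the sketch below colors each mesh vertex by projecting it into every calibrated camera with a simple pinhole model and averaging the pixels it lands on. `PinholeCamera` and `paint_vertices` are hypothetical names, the camera parameters are assumed to be known from calibration, and occlusion is deliberately ignored; vertices that no camera sees are left as `None`, which is the gap the article says deep learning fills.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[float, float, float]
Image = Sequence[Sequence[RGB]]  # image[row][col] -> (r, g, b)


@dataclass
class PinholeCamera:
    """Hypothetical calibrated camera: intrinsics plus a world-to-camera pose."""
    fx: float
    fy: float
    cx: float
    cy: float
    R: Sequence[Sequence[float]]  # 3x3 world-to-camera rotation, row-major
    t: Vec3                       # world-to-camera translation
    width: int
    height: int

    def project(self, p: Vec3) -> Optional[Tuple[int, int]]:
        """Return the (col, row) pixel a world point lands on, or None if not visible."""
        # Transform the point from world coordinates into camera coordinates.
        x, y, z = (
            sum(self.R[r][i] * p[i] for i in range(3)) + self.t[r] for r in range(3)
        )
        if z <= 0:  # point is behind the camera
            return None
        u = self.fx * x / z + self.cx
        v = self.fy * y / z + self.cy
        if 0 <= u < self.width and 0 <= v < self.height:
            return int(u), int(v)
        return None  # projects outside the image


def paint_vertices(
    vertices: Sequence[Vec3],
    cameras: Sequence[PinholeCamera],
    images: Sequence[Image],
) -> List[Optional[RGB]]:
    """Color each vertex by averaging the pixels it projects to in every camera.

    Occlusion is ignored in this sketch; vertices no camera sees get None.
    """
    colors: List[Optional[RGB]] = []
    for p in vertices:
        samples = []
        for cam, img in zip(cameras, images):
            pixel = cam.project(p)
            if pixel is not None:
                col, row = pixel
                samples.append(img[row][col])
        if samples:
            n = len(samples)
            colors.append(tuple(sum(s[k] for s in samples) / n for k in range(3)))
        else:
            colors.append(None)
    return colors


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    cam = PinholeCamera(1.0, 1.0, 1.0, 1.0, identity, (0.0, 0.0, 0.0), 2, 2)
    black = (0, 0, 0)
    img = [[black, black], [black, (200, 100, 50)]]
    print(paint_vertices([(0, 0, 1), (0, 0, -1)], [cam], [img]))
    # [(200.0, 100.0, 50.0), None]
```

Averaging is the simplest blending choice; practical texturing systems usually weight each camera by viewing angle and test visibility against a depth map before sampling.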